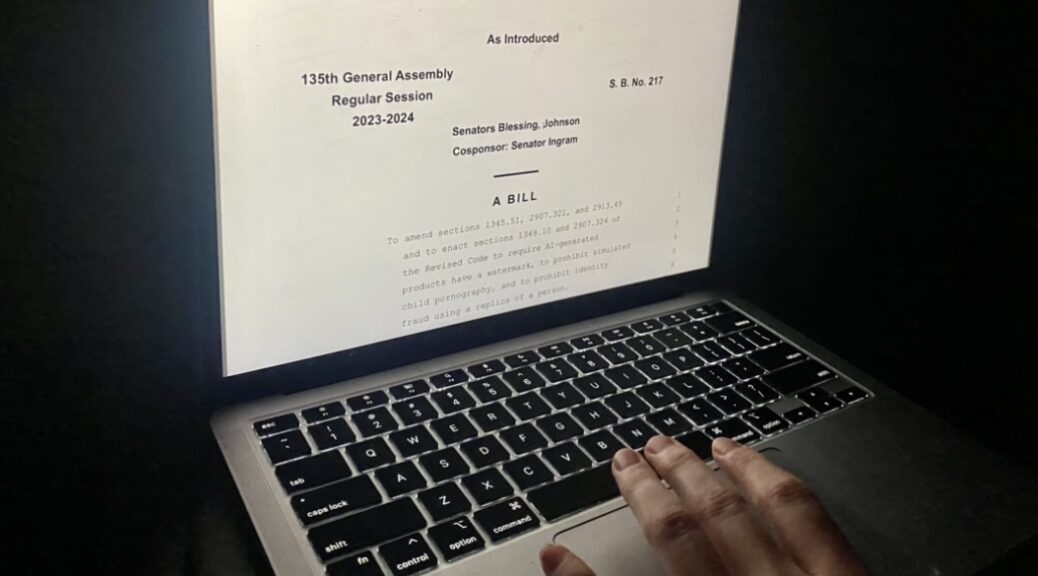

Senate Bill 217: How a proposed bill could affect the future of artificial intelligence

As artificial intelligence becomes more accessible, the loophole that allows for the creation of sexually explicit material depicting children, or adults who have not consented, becomes more prominent.

Ohio lawmakers hope to change this with the introduction of Senate Bill 217, a bill that would criminalize the act of creating sexually obscene material of children or non-consenting adults. The proposed bill will also require artificial intelligence content to have a watermark on it so that people know it was created with artificial intelligence.

This proposal comes on the heels of pop star Taylor Swift falling victim to a series of sexually explicit, non-consensual images of her likeness created by artificial intelligence. The images drew widespread attention and traffic to the websites where they were posted, and Swift’s limited legal options prompted Ohio lawmakers to address the legal gray area surrounding artificial intelligence and how it could allow offenders to escape charges.

“I believe artificial intelligence can be a gray area when it comes to the law due to the constant changes and updates being made to artificial intelligence, and what has not yet been discovered with its capabilities,” said Julian Wagner, a legal intern at the Hamilton County Courthouse. “It is unprecedented in the way it can recreate likeness to the degree it has done.”

The gray area concerning computer-generated imagery and child pornography laws was broached in Ashcroft v. Free Speech Coalition, a Supreme Court case argued in 2001 and decided in 2002. The court ruled that a ban on computer-generated images that appear to be sexually explicit images of a minor was unconstitutional, as the statute's definition was too broad.

The court’s decision to strike down the ban on virtual child pornography in the Child Pornography Prevention Act of 1996 was partially due to the language used in the act. Because computer-generated images, like artificial intelligence images, depict the appearance of a person rather than an actual person, the 1996 act included the words “appears to be” and “conveys the impression.”

In its thirteen-page proposal, Senate Bill 217 uses the language “simulated obscene material” and clarifies that material created with artificial intelligence falls within the bounds of that term. This clarification could prevent the bill from being discarded due to vague language, as it sets clear parameters for what can be considered child pornography in relation to artificial intelligence in a way that has not been defined before.

Ashcroft v. Free Speech Coalition set the precedent for laws regarding child pornography and computer-generated material, as the court’s decision caused computer-generated child pornography to be considered constitutionally protected speech under the First Amendment. With the proposal of Senate Bill 217, the topic of First Amendment rights is pertinent once again.

“I believe that depending on the restrictions implemented against AI by the bill, we could see some circumstantial issues arise with First Amendment rights. However, if it causes harm to another individual or group, it would be appropriate to take action,” said Wagner.

Senate Bill 217 faces additional controversy due to the proposed requirement for artificial intelligence-generated images to include a watermark making it clear that the image was created using artificial intelligence.

“I think that AI should have a watermark and that it’s important for people to understand if something was generated by AI,” said Eyas Alarayshi, Messer Construction Cybersecurity specialist. “As AI faces new advancements it becomes harder to tell if pictures and videos are real or AI-generated. While this is innovative and exciting for the future of AI and technology, it’s definitely scary when you consider how this can deceive people if the intent is to harm.”

Senate Bill 217 aims to make the distinction between artificial intelligence-generated images and real images immediately clear so that there is no confusion. The bill would also make the removal of such a watermark subject to civil action, including a penalty of up to ten thousand dollars.

“Sometimes having a watermark could significantly diminish user experience. This could encroach on the individual’s right to express. If someone goes through the trouble of designing and building their own convolutional neural network and wants to make artwork out of it, it feels unfair to make them stamp a watermark on it,” said Luke Allen, a machine learning student at The Ohio State University.

While there has been backlash regarding the watermark proposal, Senate Bill 217 would require artificial intelligence systems to automatically include a watermark on all material they create. Individuals would be held responsible only if they remove the watermark with the intention of concealing the material's artificial intelligence origin.

The inclusion of a watermark could prevent confusion about artificial intelligence images, explicit or otherwise, and could be especially important when public figures are depicted, as in the photos of Taylor Swift.

If passed, Senate Bill 217 would set a new standard for explicit content created with artificial intelligence and could fill in the gray area that pervades artificial intelligence law.